Many organizations are considering migrating their on-premises SIEM to the cloud, usually to Microsoft Sentinel, Google Chronicle or another solution considered ‘cloud native’. In this article we’ll focus on Sentinel. Microsoft provides the Azure pricing calculator, which lets customers estimate the cost of their services. However, customers who approach this transition with a lift-and-shift mindset, estimating the cost of Sentinel based on their current data volumes and usage, are likely to be displeased by the results. In this article, we’ll discuss some measures that can be taken to turn Sentinel from a distant vision into a sensible and realistic option.

When migrating to a cloud-based SIEM, it is likely that the cost of ingestion and storage will increase significantly, which makes architecture, design and careful consideration of each log source even more important than before. We will propose multiple architectural alternatives for reducing cost, and provide a list of questions you may use as guidance whenever you plan to onboard new log sources.

Minimize cost without sacrificing ability

Which strategy you pick when planning your SIEM will depend on factors such as team size and skillset, risk appetite, budget and more. For most organizations the goal should be to minimize cost without sacrificing the ability to detect and respond to security threats. Again, your capabilities should align with your risk appetite. Before we dive deeper into how we achieve our goal, let's establish a common understanding of log types.

There are a vast number of different log types that can be ingested into your SIEM, each with its own relevance for detecting and investigating security incidents. In this article, we have decided to divide them into three different categories:

- Processed events. These are pre-processed events and alerts you receive from other security tools. Examples include EDR, IAM and CNAPP.

- System logs. These are logs you might want to monitor in real-time for security incidents. You either plan on developing your own detections using them, or you frequently need them to verify other detections.

- High volume - investigation logs. These logs are not used for detection but you need them in cases where you have indicators of compromise, or you need to identify the root cause of an attack. Compliance may also be the reason for keeping these logs in retention.

Operating a SIEM efficiently can be a hard task for small and medium security teams. Gathering logs, normalizing data, and developing and tuning detections can be very resource-consuming, both in manpower and time. Building detections in the SIEM also drives cost because you need to ingest the logs your detections are based on. This is especially true for Sentinel, where logs used in real-time detection should preferably be ingested as analytic logs - the most expensive log plan in Log Analytics.

One strategy to reduce costs is to let source systems do the heavy lifting for detections where it is viable. Most security tools will generate alerts and recommendations based on their logs, and many will let you create your own custom detections. Ingesting processed events from all your security tools will make your SIEM a single pane of glass for security monitoring, while you can use cheaper alternatives to ingest and store raw data. It is important to note that although we use cheaper alternatives, data should still be available to our analysts when needed. This is how we minimize cost without sacrificing ability.

Let’s say we receive an alert from Microsoft Defender for SQL about a possible SQL injection attack. In that situation we would likely want to investigate using logs from the PaaS resource, which is a legitimate case for ingesting these logs. However, we might choose a cheaper alternative for ingestion, as we are not planning on developing detections already covered by Defender for SQL.

Most systems don’t generate processed events though, and you will have to consider ingesting their logs into Sentinel for real-time monitoring. Consider Microsoft's commitment tiers if you are going to ingest more than 100 GB of analytic logs per day. Microsoft also lets you ingest a number of data sources for free, including Azure Activity Logs, Office 365 Audit Logs and alerts from pretty much all their security services. These are all high-value logs that you can ingest and query for up to 90 days without any additional charges!

It is also worth noting that many organizations use a third-party SOC for monitoring logs in addition to their own internal SIEM solution. Close collaboration and communication can be a big cost saver if you uncover areas where you have duplicate detection. Layered security is a good thing, but you usually want it to be intentional. Also, you most likely don’t have to ingest data into Sentinel to export it to your external SOC.

Architectural considerations

O3 Cyber recently supported a customer with an incident where we leveraged Azure Data Explorer (ADX) as part of the investigation. During the analysis we came to the conclusion that ADX is a cheaper alternative to Sentinel that is suitable in some cases. It turns out that extensive research has already been done on the subject. We have not carried out any groundbreaking research as part of this article. We have set up the different architectures in a test environment, but we rely on other researchers' work and give credit where it is due.

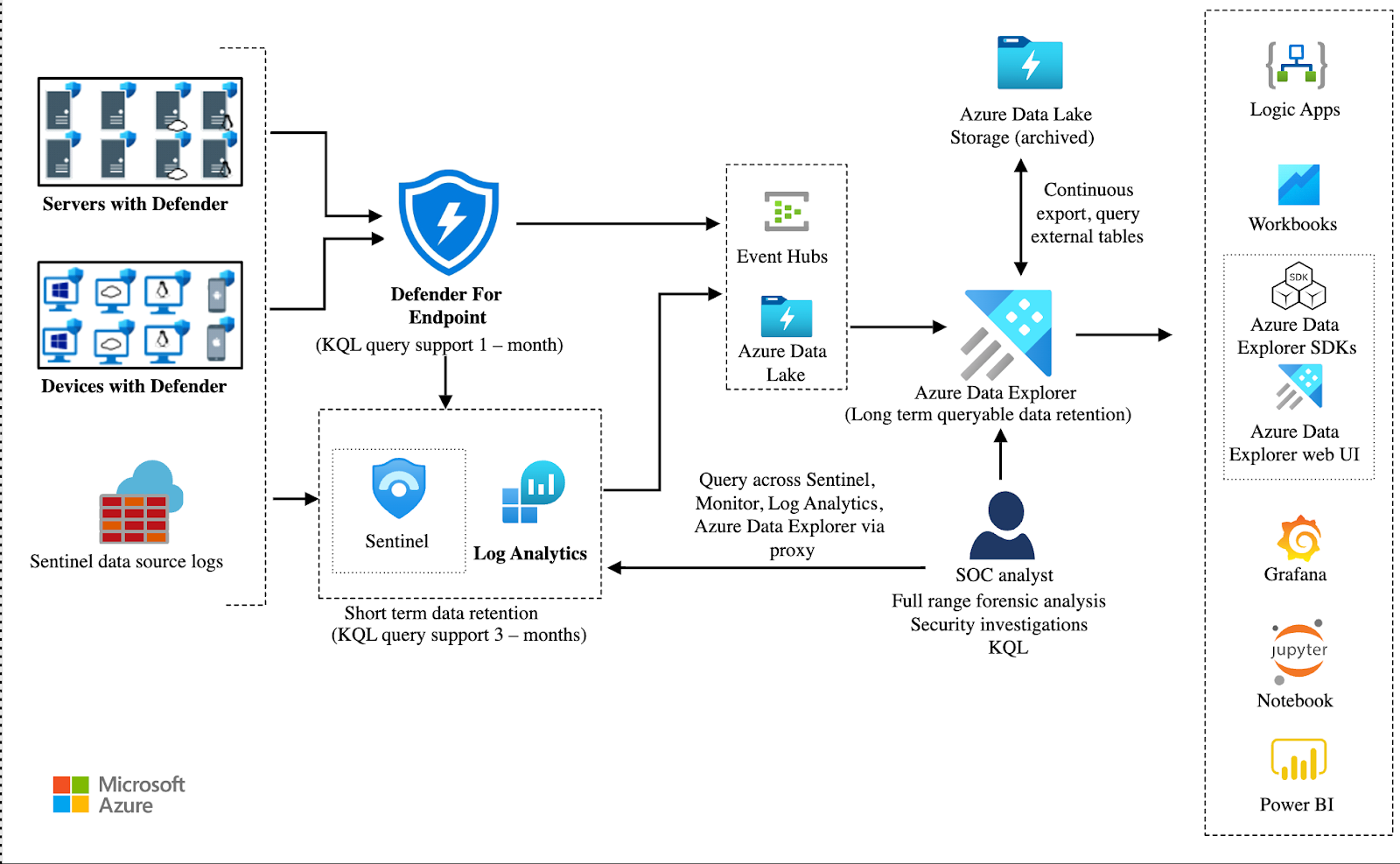

Javier Soriano wrote a blog post about using ADX for long-term log retention back in 2020. The article suggested exporting logs from Log Analytics to Event Hub and then to Azure Data Explorer.

Microsoft later introduced the native archive function in Sentinel, which made this solution a bit less relevant. There is, however, no clear-cut answer to whether the native archive solution is better than ADX for long-term retention, but at least it comes with a bit less architectural complexity. It is important to note, though, that not all logs with security relevance have to go through Sentinel. This is shown in the image from the article: data from Defender for Endpoint is streamed directly to Event Hubs and then to ADX, an approach that is still highly relevant.

Koos Goossens has written an amazing two-part article showing how he has implemented this architecture to export Defender for Endpoint data for multiple large enterprises. It includes extensive research on how to calculate data volumes and scale your components, a script to simplify the deployment and a real-world example of cost estimation from one of his customers.

It is definitely worth noting that the architecture comes with increased complexity and overhead when onboarding new log sources, but there are some big advantages that make it worth exploring. One major benefit is that your analysts will have full KQL support once the data is in ADX.

Microsoft addressing concerns

Microsoft has introduced multiple tiers to Azure Monitor to address customers' concerns about the high cost of log ingestion. In addition to analytic logs, they now offer basic and auxiliary logs.

These plans come at a significantly lower price ($0.13 per GB for auxiliary and $0.65 per GB for basic in West Europe), making Sentinel a lot more economically feasible for many organizations. However, there are some limitations worth mentioning.
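To get a feel for what these per-GB prices mean in practice, the arithmetic can be sketched as below. This is a minimal illustration, not a replacement for the Azure pricing calculator: it only covers ingestion at the two prices quoted above, ignores query, retention and Sentinel charges, and the 50 GB/day source is a hypothetical example. Prices change over time, so treat the numbers as placeholders.

```python
# Per-GB ingestion prices quoted in this article for West Europe (USD).
# Assumption: flat pricing with no discounts; verify current prices yourself.
PRICE_PER_GB = {
    "auxiliary": 0.13,
    "basic": 0.65,
}


def ingestion_cost(gb_per_day: float, price_per_gb: float, days: int = 30) -> float:
    """Return the ingestion cost over `days` days at a flat per-GB price."""
    if gb_per_day < 0 or price_per_gb < 0 or days < 0:
        raise ValueError("volume, price and days must be non-negative")
    return round(gb_per_day * price_per_gb * days, 2)


def compare_plans(gb_per_day: float, prices=None, days: int = 30) -> dict:
    """Return the ingestion cost per plan for the same daily volume."""
    prices = PRICE_PER_GB if prices is None else prices
    return {plan: ingestion_cost(gb_per_day, price, days) for plan, price in prices.items()}


if __name__ == "__main__":
    # Hypothetical log source producing 50 GB per day.
    for plan, cost in compare_plans(50).items():
        print(f"{plan}: ${cost:,.2f} per 30 days")
```

To compare against analytic logs, pass your own price table via the `prices` argument using the rate that applies to your region and commitment tier.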

Both basic and auxiliary logs have interactive retention for 30 days where you can query the data for a small charge. After the 30 days you may archive the data and then use search jobs and data restores whenever you need to perform investigations. Keep in mind that these operations can be quite costly, so if you have data you expect to query frequently, other options might be more suitable. Both plans give full KQL support on a single table, with lookup to analytic tables.

Microsoft has suggested a list of log types that are good use cases for basic/auxiliary logs. This list would also be a good starting point if you are considering ADX for long-term storage. Again, to maximize ROI, we should always strive to ingest logs into the cheaper alternatives as long as it does not negatively impact our detection capabilities.

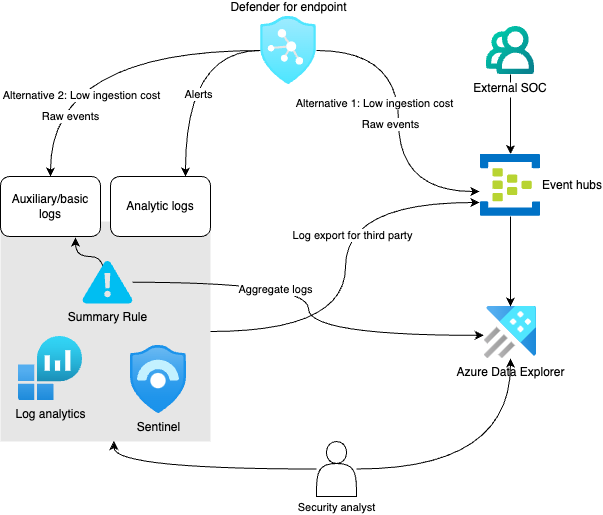

Summary rules

Summary rules are built-in functionality in Sentinel used to aggregate high-volume data, with the results written to analytic tables. This allows near real-time threat detection in your high-volume logs, both for your basic/auxiliary logs and for data in ADX. It also lets you create summarized reports and dashboards while keeping costs at a minimum.
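The idea behind this kind of aggregation can be illustrated outside of Sentinel. The sketch below is plain Python, not a summary rule: real summary rules are KQL queries that Sentinel runs on a schedule. The event fields (`time`, `src_ip`, `action`) and the sample records are hypothetical. It only shows how many raw events collapse into a few summarized rows per time bin, which is what makes the summarized table cheap enough to keep in an analytic table.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def bin_timestamp(ts: datetime, bin_size: timedelta) -> datetime:
    """Floor a timestamp to the start of its bin (similar in spirit to KQL's bin())."""
    return EPOCH + ((ts - EPOCH) // bin_size) * bin_size


def summarize(events, bin_size: timedelta = timedelta(hours=1)):
    """Count events per (time bin, source IP, action) and return one row per group."""
    counts = Counter()
    for event in events:
        key = (bin_timestamp(event["time"], bin_size), event["src_ip"], event["action"])
        counts[key] += 1
    return [
        {"bin_start": start, "src_ip": ip, "action": action, "event_count": n}
        for (start, ip, action), n in sorted(counts.items())
    ]


# Hypothetical raw firewall events; a real source would produce millions of these.
SAMPLE_EVENTS = [
    {"time": datetime(2024, 1, 1, 10, 5, tzinfo=timezone.utc), "src_ip": "10.0.0.5", "action": "deny"},
    {"time": datetime(2024, 1, 1, 10, 40, tzinfo=timezone.utc), "src_ip": "10.0.0.5", "action": "deny"},
    {"time": datetime(2024, 1, 1, 11, 2, tzinfo=timezone.utc), "src_ip": "10.0.0.5", "action": "deny"},
]

if __name__ == "__main__":
    for row in summarize(SAMPLE_EVENTS):
        print(row)
```

A detection would then run against the summarized rows, for example by alerting when `event_count` for a single source exceeds a threshold within one bin.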

Microsoft has some great docs covering this topic including examples showing how summary rules can be used for detection purposes.

The image below is an overview of the architecture we propose where systems export processed events to Sentinel using analytic tables while leveraging cheaper options for high-volume data. What option you use for high-volume logs may depend on support in the source system.

Guiding questions for log ingestion

Ask yourself these questions before ingesting a new data source to identify its purpose, whether there is an actual need, and how you will ingest and store the data.

- Is the source system already generating processed events based on the data we want to ingest?

- Does the source system allow for custom detections?

- Will the logs we are ingesting be used for one or more detections, and do we have similar detections in place in other tools?

- Do we have an external provider also monitoring these logs for security incidents? (Meaning layered detections)

- Are the detections we are developing providing value in terms of reduced risk greater than the cost of log ingestion?

- Could Summary rules be used for the detection we are developing?

- Could the logs we are ingesting be needed for future investigations or compliance, and if so, how long do we want to retain the data?
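The questions above can be turned into a rough decision helper. The sketch below is one possible reading of them, not a definitive policy: the field names, the ordering of the checks and the returned labels are all our own assumptions, and it deliberately leaves out the risk-versus-cost question, which needs human judgement. It only covers raw logs; processed events from the source system would still go to analytic tables.

```python
from dataclasses import dataclass


@dataclass
class LogSourceAnswers:
    """Answers to the guiding questions for one log source (hypothetical field names)."""
    needed_for_detection: bool          # will we build detections on these logs?
    detection_covered_elsewhere: bool   # source system, other tool or external SOC
    summary_rules_sufficient: bool      # can a summary rule serve the detection?
    needed_for_investigation_or_compliance: bool


def recommend_raw_log_plan(answers: LogSourceAnswers) -> str:
    """Suggest where the raw logs of a source could go, cheapest viable option first."""
    needs_own_detection = (
        answers.needed_for_detection and not answers.detection_covered_elsewhere
    )
    if needs_own_detection and not answers.summary_rules_sufficient:
        return "analytic logs"
    if needs_own_detection:
        return "basic/auxiliary logs or ADX, with a summary rule"
    if answers.needed_for_investigation_or_compliance:
        return "basic/auxiliary logs or ADX"
    return "do not ingest"


if __name__ == "__main__":
    # The Defender for SQL example from earlier: detection is covered by the
    # source system, but the PaaS logs are still needed for investigations.
    sql_paas_logs = LogSourceAnswers(
        needed_for_detection=False,
        detection_covered_elsewhere=True,
        summary_rules_sufficient=False,
        needed_for_investigation_or_compliance=True,
    )
    print(recommend_raw_log_plan(sql_paas_logs))
```

Retention period is not modeled here; it would be a separate input once the destination has been chosen.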

Conclusion

Moving your on-premises SIEM to Sentinel with a lift-and-shift approach can be a costly experience. There are a few new ways of doing things you have to keep in mind, like different plans for ingestion and additional cost for queries and detections. However, there are design decisions and a few other measures that can be taken to make Sentinel a sensible option.

In this article we propose an architecture where source systems do most of the heavy lifting for detection where possible, and only processed events and logs necessary for real-time detections are ingested as analytic logs. Cheaper alternatives should be used for high-volume log sources. Both the built-in multi-tier log plans and archive functionality in Sentinel, as well as Azure Data Explorer, were mentioned as reasonable options.

In the last part of the article we provide a list of guiding questions that can be used before ingesting new log sources. These questions can help you decide how logs should be ingested, stored and used to improve your ability to detect threats while keeping cost at a minimum.